Piotr Pasich has been a Senior Software Developer at X-Team for many years. Piotr is currently finishing his degree, and together with his university team he recently built a face mask detection app. Here is why they built it, which technologies they used, and how it turned out.

Tell me about this project. How did you come up with the idea?

The idea came out of a simple exercise. At our university, we had to prepare an application, build a piece of equipment, or write a know-how document on a really broad topic: healthcare. It had to be something useful. We had to talk with end users and potential customers to understand their needs and ultimately meet them. Just like what you do in a startup, except on a smaller scale.

During a brainstorming session, my fellow students and I talked about our experiences during the pandemic. I mentioned the new formalities the pandemic had introduced at places like civic centers. Now people need to book an appointment for a specific time. Someone at the door lets in those who have an appointment and checks whether they are wearing a mask.

As a developer of many years, I thought it would be good to automate that process and free that person up for more important and interesting work.

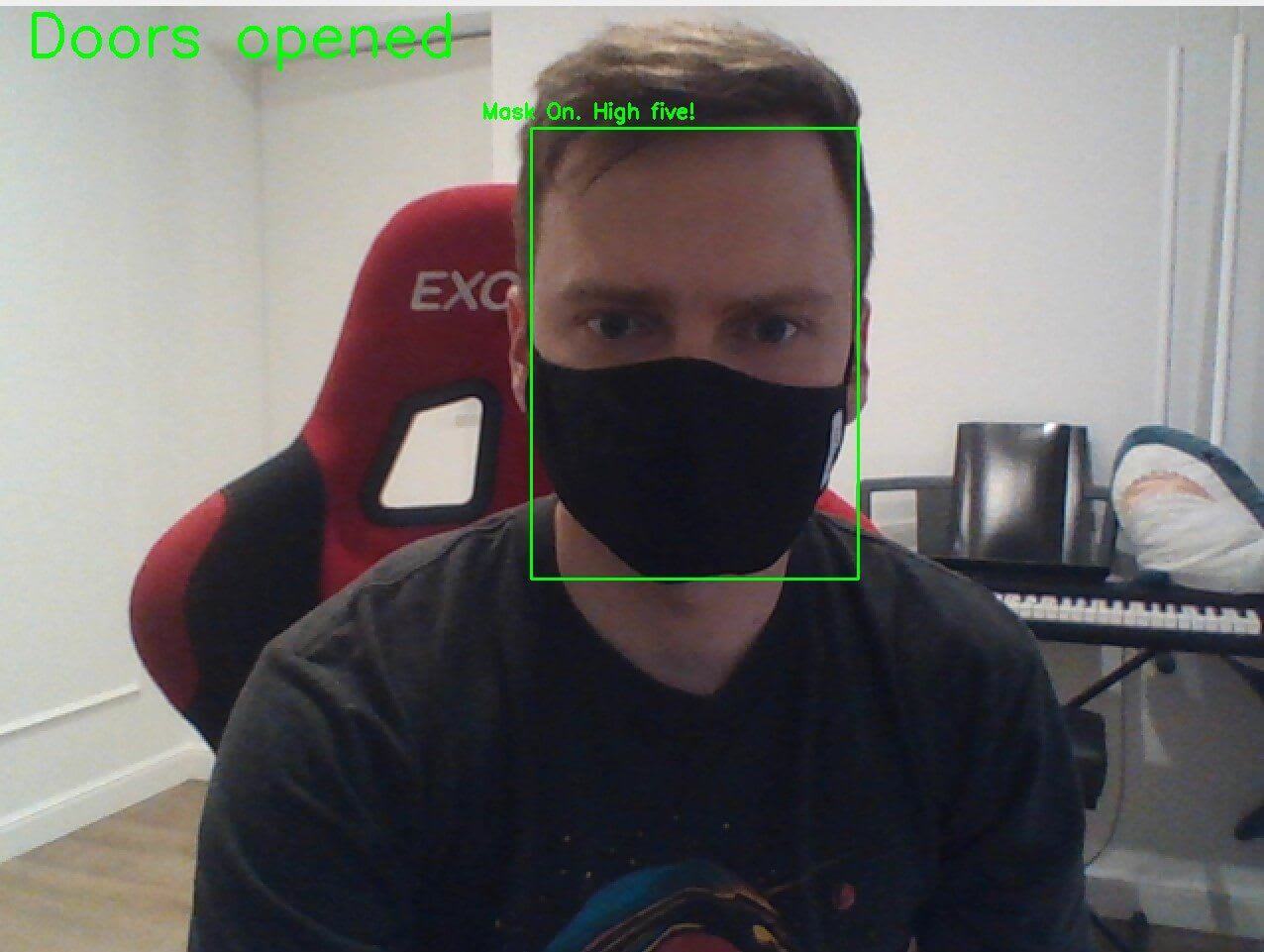

We were lucky to have a friend who works in a town hall, so we could talk to him and understand his expectations for such a project. We learned that the civil service was already using QR codes for appointments in some of its offices, but staff still had to check whether people were wearing masks and count the number of people in the building. That became the core idea of our project.

Sounds like a great idea! What technologies did you use to build this?

We had a tight deadline: three two-week sprints, so six weeks in total. Our knowledge of artificial intelligence (AI) was also limited. So we opted for:

- Python as the obvious choice for AI

- TypeScript for the backend and heart of the system

- Vue.js for the frontend as the admin panel

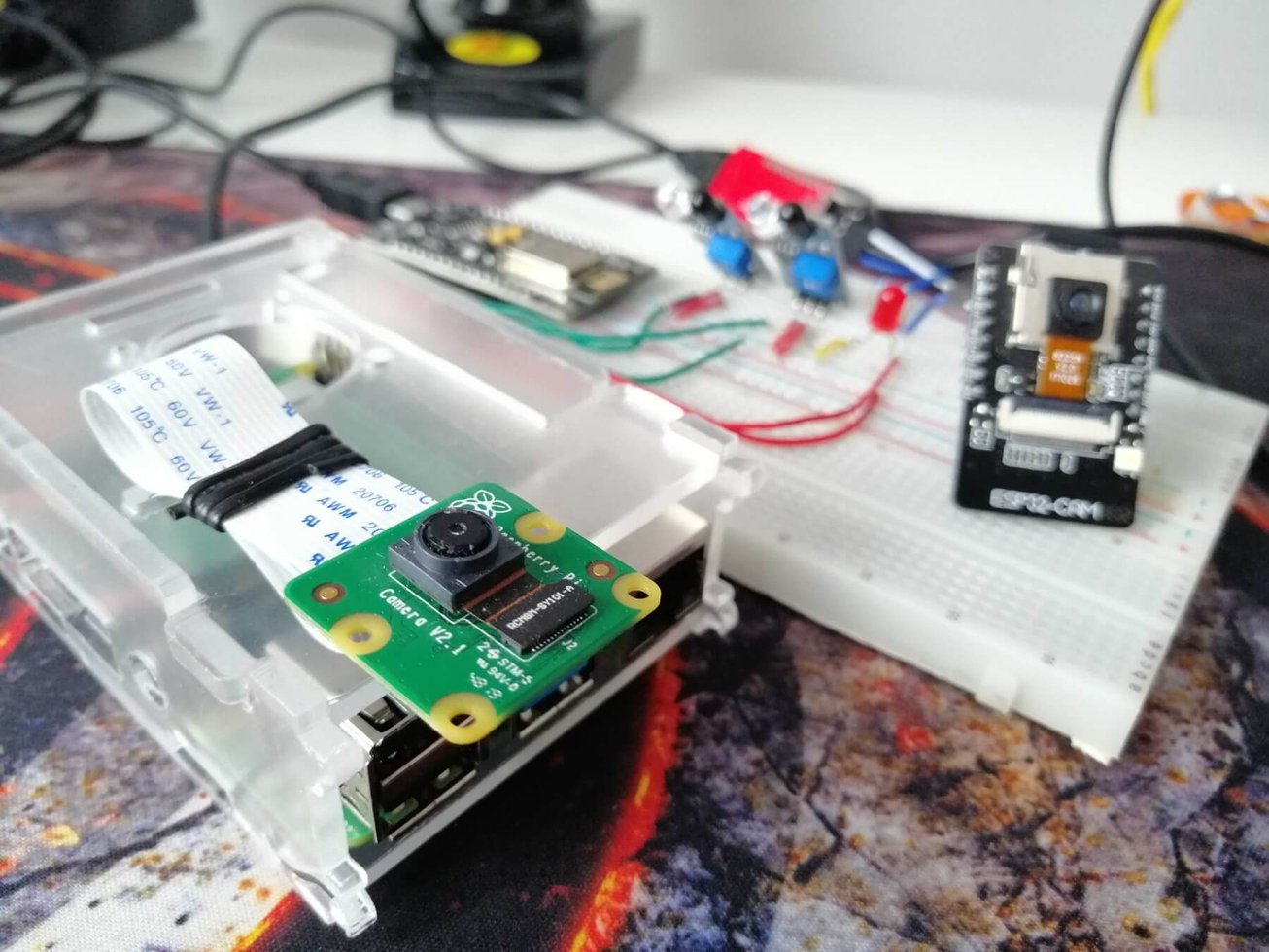

- A Raspberry Pi for handling the camera

- Arduino for counting people and opening or closing the doors

Why those technologies?

We didn't have much time, so we decided to use the technologies most of us already knew, such as TypeScript and Arduino. We'd been using Vue for our previous projects and could reuse some of our codebase. And Python is the perfect choice for AI.

We considered other technologies, such as PHP with Symfony or Laravel, C#, Java, Go, or even Ruby on Rails. They were all viable, but we realized we should focus on AI rather than on learning a new stack.

What was the most challenging part of the project?

There were two things I was worried about. First, I had no experience with AI. I had to learn how to work with a camera, train a model to recognize objects, and write code so the machine could interpret the results. We only had three sprints to finish the project, so we couldn't spend as much time on this as we would have liked.

We solved this problem by adapting an existing TensorFlow-based library to our needs. It was one of the best decisions we made during this exercise. We learned a lot about TensorFlow: how to feed it data, how much data it needs to start working (very little), how many edge cases you need to cover, and so on.

Second, we were worried about the edge cases. What if someone came in wearing a bandana, an unusual mask, or a mask and a visor? What if two people came in at the same time? What if there was a fire? What if the doors were locked? These are real questions that stakeholders ask.

As in the professional world, we prioritized the stakeholders' questions and addressed the most important ones we could realistically handle in the limited time we had. In particular, we made sure our camera could check whether multiple people were wearing masks. It wasn't too hard to implement.
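As an illustration only (this is not the team's code, and the interview doesn't describe their detector's output), the multi-person check can be reduced to a simple rule applied to each camera frame: open the door only if at least one face is visible and every detected face is confidently classified as masked. The `FaceDetection` record, the `"mask"`/`"no_mask"` labels, and the 0.8 threshold are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FaceDetection:
    # Hypothetical per-face result from a mask detector; labels are assumed.
    label: str         # "mask" or "no_mask"
    confidence: float  # detector score in [0, 1]


def should_open_door(faces: List[FaceDetection], threshold: float = 0.8) -> bool:
    """Return True only if at least one face is visible and all faces are masked.

    A face whose "mask" score is below the threshold is treated as unmasked,
    so uncertain detections keep the door closed.
    """
    if not faces:
        # Nobody detected: nothing to let in.
        return False
    return all(f.label == "mask" and f.confidence >= threshold for f in faces)


if __name__ == "__main__":
    group = [FaceDetection("mask", 0.95), FaceDetection("no_mask", 0.90)]
    print(should_open_door(group))  # one unmasked person keeps the door closed
```

Requiring *every* face to pass is the conservative choice: when two people arrive together, a single unmasked or uncertain face is enough to keep the door shut.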

Another problem came up right before the final demo of our solution: our Raspberry Pi had performance issues. I'd bought the most basic Raspberry Pi model, and it just didn't have enough computing power to smoothly handle both the camera and the AI at the same time.

Our frame rate dropped to 1 FPS. That was annoying (even though it did work). Fortunately, it was only an exercise, so budget wasn't a concern. Buying a more powerful Raspberry Pi would have been an easy fix.

How was this project different from your other projects?

At X-Team, we work with professionals who have a lot of experience. This project was different because I was the expert and tech lead on a team with four other students who were just learning how to program.

I wanted to keep the processes, coding standards, QA, and artifacts typically used in a real-world project. That was fun because universities don't tend to teach you about Git or Jira. So we had to start from scratch while also keeping our three-sprint deadline in mind.

What was your end goal? What did success look like?

Our first goal was to learn new things. I learned about AI and how to combine all the moving pieces: the backend, the frontend, the AI, and the IoT side with the Arduino and the Raspberry Pi. My team members learned what a project really looks like when it's close to professional work and which technologies they'll likely need in the future.

The second goal was to create an MVP that we were happy to show to stakeholders and might want to keep working on in the future. I think we reached that goal. When we presented our project to the stakeholders, they really liked it. Of course, the product needs more development, but it was a great start given our tight deadline.

Sounds like you learned a lot! Keep up the good work.